Artificial intelligence (AI) continues to reshape industries, and national defense is no exception. OpenAI recently announced a partnership with Anduril, a defense-focused startup. Together, they aim to provide cutting-edge AI solutions to the U.S. military. This collaboration highlights the growing influence of AI in modern warfare and raises important ethical questions.

OpenAI and Anduril: A Powerful Partnership

OpenAI, best known for products such as ChatGPT, is now venturing into defense technology. Anduril, a startup focused on autonomous defense systems, has already made waves in the industry with products that include AI-driven drones and surveillance tools.

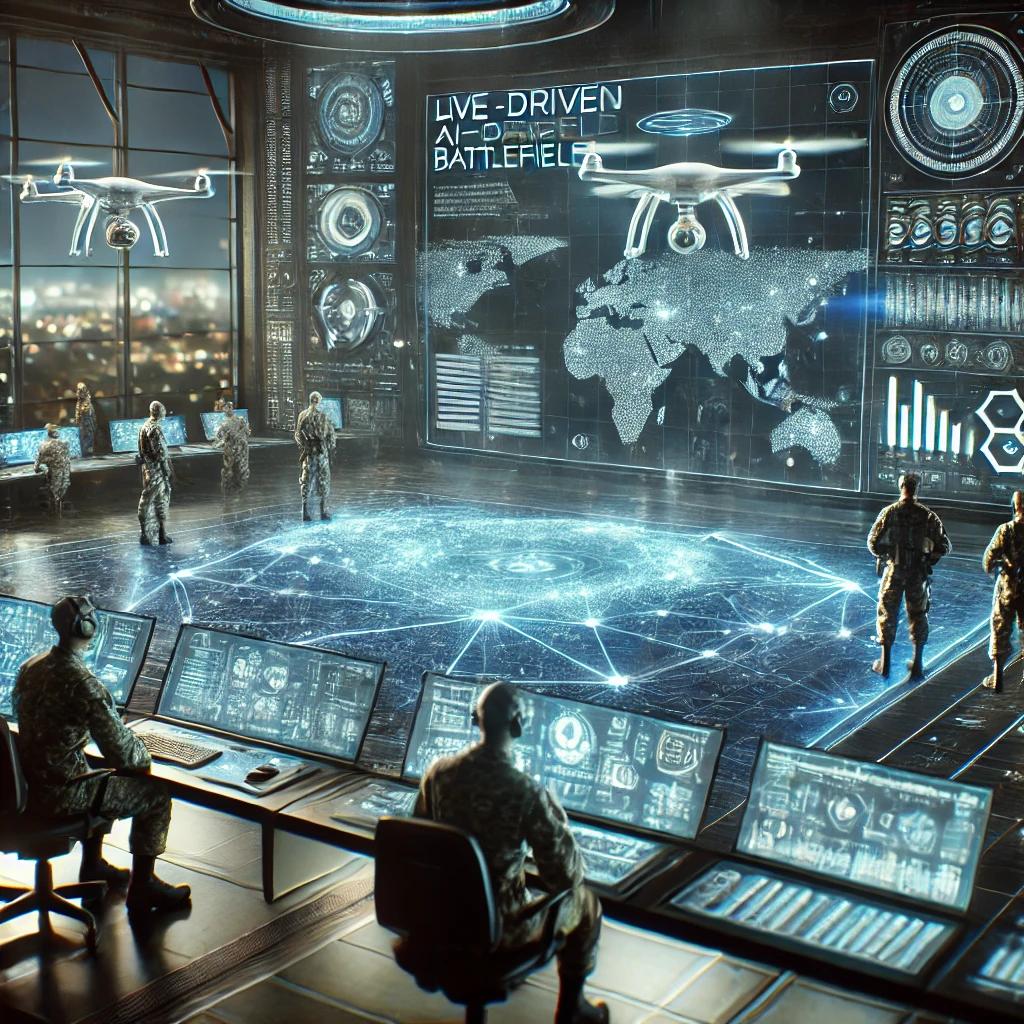

By partnering, the two companies aim to combine their strengths. OpenAI brings advanced machine learning models, while Anduril offers expertise in military applications. The collaboration signals a significant shift in how AI is being integrated into defense strategies. According to Wired, the focus will likely include improving autonomous systems and analyzing battlefield data.

Why AI in Defense?

The defense sector has long been an early adopter of new technology. AI’s appeal lies in its ability to process vast amounts of data quickly, which can support decision-making, improve accuracy, and reduce human error.

For example, AI systems can analyze satellite images faster than human analysts. They can help identify threats, anticipate enemy movements, and suggest tactical responses. Proponents argue that this efficiency can save lives on the battlefield. A Brookings Institution report notes that AI is increasingly essential for maintaining global military power.

Ethical Concerns and Controversy

However, this partnership raises ethical concerns. OpenAI’s mission has long been to ensure AI benefits all of humanity. Critics argue that using AI for military purposes contradicts this goal. Deploying AI in warfare could lead to unintended consequences, including civilian casualties.

Moreover, there are concerns about accountability. Who is responsible if an AI-driven weapon makes a mistake? The international community has yet to establish clear guidelines. As AI becomes more integrated into defense, these questions will only grow louder.

A recent article in The Guardian outlines the ethical dilemmas posed by militarized AI. It emphasizes the need for transparency and regulation.

What Does This Mean for the Future?

OpenAI’s partnership with Anduril could accelerate AI adoption in defense worldwide. Other countries may feel pressured to keep up, which could lead to an arms race in AI technology and make global defense strategies more complex.

At the same time, it presents opportunities. If used responsibly, AI can minimize risks and enhance security. However, achieving this balance will require collaboration between governments, tech companies, and ethical oversight bodies.

Conclusion

OpenAI’s move into the defense sector marks a pivotal moment for AI technology. By partnering with Anduril, the company is pushing the boundaries of what AI can achieve, but the move comes with significant ethical challenges. As the world watches, it’s crucial to ensure that AI in defense serves to protect, not harm, humanity.

For more insights into this topic, explore Wired’s in-depth analysis and stay updated on the latest tech developments.
